Performance tests are typically executed to examine speed, robustness, reliability, and application size. Performance testing goals include evaluating application output, processing speed, data transfer velocity, network bandwidth usage, maximum concurrent users, memory utilization, workload efficiency, and command response times. In short, the goal of performance testing is not to find bugs but to eliminate performance bottlenecks.

Why should you test the performance of your system?

Performance testing highlights the improvements you should make to your application's speed, stability, and scalability before it goes into production.

We perform performance testing to:

- Help ensure your software meets the expected levels of service and provides a positive user experience.

- Determine whether the application satisfies performance requirements (for instance, the system should manage up to 1,000 concurrent users).

- Locate computing bottlenecks within an application.

- Establish whether the performance levels claimed by a software vendor are indeed true.

- Compare two or more systems and identify the one that performs best.

- Measure stability under peak traffic events.

Resolving performance problems in production can be extremely expensive, so a continuous performance testing and optimization strategy is key to an effective digital strategy.

Without performance testing, software is likely to suffer from issues such as:

- Running slowly when many users use it simultaneously.

- Inconsistencies across different operating systems.

- Poor usability.

What Does Performance Testing Measure?

Performance testing can be used to analyze various success factors, such as response times and potential errors. With these results, you can confidently identify bottlenecks, bugs, and mistakes, and decide how to optimize your application to eliminate the problems.

The most common issues highlighted by performance tests are related to speed, response times, load times, and scalability.

Types of Performance Testing

First, it is important to understand how the software performs on users’ systems. Several types of performance tests can be applied during software testing. Performance testing is non-functional testing, designed to determine the readiness of a system.

There are many performance testing techniques:

Load testing

For each scenario to be run, the system receives as many requests as the expected number of users (for example, 70 users), and the results are observed.

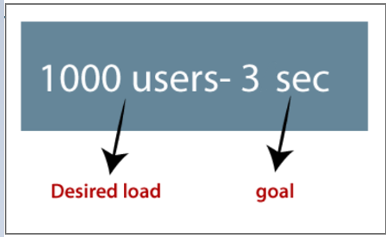

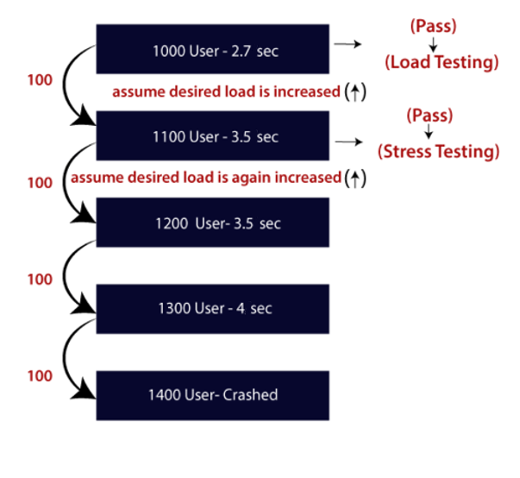

For example, in the image above, requests from 1000 users are the desired load, as specified by the customer. A maximum response time of 3 seconds is the goal we want to achieve in the load test.
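A load test like this can be sketched with nothing but the Python standard library. It is an illustration only, not how any particular tool works. `send_request` is a hypothetical stand-in for one user's request, which in practice would be an HTTP call to the system under test. The 1000-user and 3-second values come from the example above.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Optional, Tuple


def timed_call(send_request: Callable[[], None]) -> Tuple[float, bool]:
    """Run one request; return (elapsed seconds, whether it succeeded)."""
    start = time.perf_counter()
    try:
        send_request()
        ok = True
    except Exception:
        ok = False  # any exception is counted as a failed request
    return time.perf_counter() - start, ok


def run_load_test(send_request: Callable[[], None], users: int,
                  max_workers: Optional[int] = None) -> Tuple[List[float], int]:
    """Send one request per simulated user and collect response times.

    By default the pool may use one thread per user, so requests can run
    concurrently; pass max_workers to cap the concurrency instead.
    Returns (response times in seconds, number of failed requests).
    """
    if users < 1:
        raise ValueError("users must be at least 1")
    with ThreadPoolExecutor(max_workers=max_workers or users) as pool:
        results = list(pool.map(lambda _: timed_call(send_request), range(users)))
    latencies = [elapsed for elapsed, _ in results]
    errors = sum(1 for _, ok in results if not ok)
    return latencies, errors


def load_test_passed(latencies: List[float], errors: int,
                     max_response_s: float = 3.0) -> bool:
    """The example's goal: no failures and every response within max_response_s."""
    return errors == 0 and max(latencies) <= max_response_s


if __name__ == "__main__":
    def fake_request() -> None:
        # Hypothetical stand-in for a real request to the system under test.
        time.sleep(0.01)

    latencies, errors = run_load_test(fake_request, users=1000)
    print("passed:", load_test_passed(latencies, errors))
```

Threads are a reasonable fit here because each simulated request mostly waits on I/O. Dedicated tools such as JMeter do the same job at larger scale and with richer reporting.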

“A full load test tells everything.”

“Load testing is important because, if it is ignored, performance problems can cause financial losses.”

“Load testing typically exposes performance bottlenecks and improves the scalability and stability of the application before it is available for production.”

It is tempting to just run a test at the full load to find all the performance issues. However, that kind of test tends to reveal so many issues at once that it is hard to focus on individual solutions. Starting at a lower load and scaling up incrementally may seem unnecessarily slow, but it produces results that are easier and more efficient to troubleshoot.
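Scaling up incrementally can be planned as a list of load stages, each run and checked before moving on. This is a minimal sketch. The `ramp_plan` helper and its parameters are hypothetical, and the 100-user step and 1000-user target are only example values.

```python
from typing import List


def ramp_plan(start_users: int, target_users: int, step: int) -> List[int]:
    """Return the user counts to test, from start_users up to target_users.

    The target is always included as the last stage, even when the step
    does not divide the range evenly.
    """
    if start_users < 1 or step < 1 or target_users < start_users:
        raise ValueError("need 1 <= start_users <= target_users and step >= 1")
    stages = list(range(start_users, target_users, step))
    stages.append(target_users)
    return stages


if __name__ == "__main__":
    # Stages of 100, 200, ..., 1000 users.
    print(ramp_plan(100, 1000, 100))
```

If a stage fails, you only have to look at what changed since the previous, passing stage. That narrows troubleshooting considerably.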

Stress testing

The breaking point of the system is determined by running the scenarios with the maximum number of users or more.

For example, suppose we take the example above and increase the number of users beyond the defined limit of 1000 to 2000. The response-time goal for this scenario must then also increase, because the load is now much heavier than the system was designed for (1000 users).

The goal could then be to get responses back in less than 6 seconds. Once the load test meets its goal, we can use stress testing to observe the limits of our system.

With the load test: requests from 1000 users, with a 3-second goal. If it works without any errors or crashes, we can extend our tests with stress testing.

With the stress test: we send the system a heavy load (for example, requests from 2000 users) and expect it to respond successfully without any errors or system crashes. If we get any errors under this pressure, we must troubleshoot them. Afterward, we can discuss how to improve the performance of the system.
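The search for a breaking point can be sketched as a loop that keeps adding users until a run fails. `find_breaking_point` is a hypothetical helper, not a feature of any specific tool. `run_stage` stands in for whatever executes one test at a given load and reports whether the system responded without errors or crashes.

```python
from typing import Callable, Optional, Tuple


def find_breaking_point(run_stage: Callable[[int], bool], start_users: int,
                        step: int, max_users: int) -> Tuple[Optional[int], Optional[int]]:
    """Increase the load by `step` users until run_stage reports a failure.

    Returns (last load that worked, first load that failed). The first value
    is None if even the starting load failed; the second is None if nothing
    failed up to max_users.
    """
    if step < 1:
        raise ValueError("step must be at least 1")
    last_ok: Optional[int] = None
    users = start_users
    while users <= max_users:
        if not run_stage(users):
            return last_ok, users
        last_ok = users
        users += step
    return last_ok, None


if __name__ == "__main__":
    # Hypothetical system under test that stops responding above 1350 users.
    def fake_stage(users: int) -> bool:
        return users <= 1350

    print(find_breaking_point(fake_stage, start_users=1000, step=100, max_users=2000))
```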

Spike testing

Endurance testing

The run starts with the expected number of users (70 users), and we observe whether the system can keep handling requests for the specified time (X hours).

Volume testing

The behaviour of the system is observed at varying database volumes and under sudden increases in database load.

Most Common Problems Observed in Performance Testing

During performance testing, developers look for performance symptoms and issues. Speed issues (for example, slow responses and long load times) are often observed and addressed.

Other performance problems that can be observed include:

Bottlenecking

This occurs when data flow is interrupted or halted because there is not enough capacity to manage the workload.

Poor scalability

If software cannot manage the desired number of concurrent tasks, results could be delayed, errors could increase, or other unexpected behavior could occur, often involving:

- Disk usage

- CPU usage

- Memory leaks

- Operating system limitations

- Poor network configuration

- Software configuration issues: settings are often not set high enough to manage the workload.

- Insufficient hardware resources: performance testing may reveal physical memory constraints or low-performing CPUs.

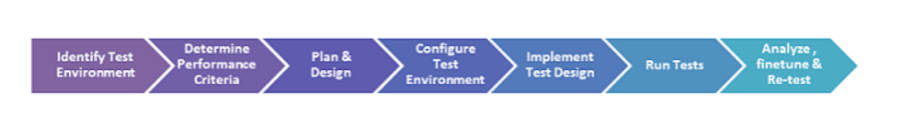

Performance Testing Process

The methodology adopted for performance testing can vary widely, but the objectives remain the same. Performance testing can help demonstrate that your software system meets predefined performance criteria. It can also compare the performance of two software systems, or identify the parts of your software system that degrade its performance.

1) Identify Your Testing Environment

Know your physical test environment, your production environment, and the testing tools available. Understand the details of the hardware, software, and network configurations used during testing before you begin the testing process. This helps testers create more efficient tests.
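Recording the test environment alongside the results makes runs easier to compare later. Below is a minimal sketch using Python's standard `platform` and `os` modules. The `describe_environment` helper is hypothetical. It only captures what the local machine reports about itself, so network configuration and the production environment still have to be documented separately.

```python
import os
import platform
from typing import Dict


def describe_environment() -> Dict[str, str]:
    """Collect basic hardware/software facts about the machine running the tests."""
    return {
        "os": platform.system(),
        "os_release": platform.release(),
        "machine": platform.machine(),
        "processor": platform.processor(),  # may be an empty string on some systems
        "cpu_count": str(os.cpu_count()),   # "None" if the count is unknown
        "python": platform.python_version(),
        "hostname": platform.node(),
    }


if __name__ == "__main__":
    for key, value in describe_environment().items():
        print(f"{key}: {value}")
```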

2) Identify the Performance Acceptance Criteria

This includes goals and constraints for throughput, response times, and resource allocation. It is also necessary to identify project success criteria outside of these goals and constraints. Testers should be empowered to set performance criteria and goals because often the project specifications will not include a wide enough variety of performance benchmarks.
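Acceptance criteria are easier to check consistently when they are written down as data. The sketch below shows one possible shape, with hypothetical names (`AcceptanceCriteria`, `RunSummary`, `check`). The 3-second limit reuses this article's example. The throughput and error-rate thresholds are placeholders for your project's own goals.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class AcceptanceCriteria:
    max_response_s: float      # slowest acceptable response time
    min_throughput_rps: float  # minimum requests handled per second
    max_error_rate: float      # allowed fraction of failed requests (0.0 to 1.0)


@dataclass
class RunSummary:
    max_response_s: float
    throughput_rps: float
    error_rate: float


def check(criteria: AcceptanceCriteria, run: RunSummary) -> List[str]:
    """Return the violated criteria; an empty list means the run passed."""
    violations = []
    if run.max_response_s > criteria.max_response_s:
        violations.append(
            f"slowest response {run.max_response_s:.2f}s > {criteria.max_response_s:.2f}s")
    if run.throughput_rps < criteria.min_throughput_rps:
        violations.append(
            f"throughput {run.throughput_rps:.1f} rps < {criteria.min_throughput_rps:.1f} rps")
    if run.error_rate > criteria.max_error_rate:
        violations.append(
            f"error rate {run.error_rate:.2%} > {criteria.max_error_rate:.2%}")
    return violations


if __name__ == "__main__":
    # Placeholder thresholds; only the 3-second goal comes from the article.
    goals = AcceptanceCriteria(max_response_s=3.0, min_throughput_rps=200.0,
                               max_error_rate=0.01)
    print(check(goals, RunSummary(max_response_s=2.7, throughput_rps=250.0, error_rate=0.0)))
```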

3) Plan & Design Performance Tests

Determine how usage is likely to vary amongst end users and identify key scenarios to evaluate for all possible use cases. It is necessary to simulate a variety of end users, plan performance test data and outline what metrics will be gathered.
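Simulating a variety of end users often comes down to a workload mix. Each virtual user is assigned a scenario according to how common that behaviour is expected to be. Below is a minimal sketch built around a hypothetical `assign_scenarios` helper. The scenario names and percentages are invented for illustration, and a fixed random seed keeps the assignment reproducible between runs.

```python
import random
from collections import Counter
from typing import Dict, List


def assign_scenarios(users: int, mix: Dict[str, float], seed: int = 42) -> List[str]:
    """Pick a scenario for each virtual user, weighted by the expected usage mix."""
    rng = random.Random(seed)  # fixed seed: same assignment on every run
    names = list(mix)
    weights = [mix[name] for name in names]
    return rng.choices(names, weights=weights, k=users)


if __name__ == "__main__":
    # Hypothetical usage mix: 60% browse, 30% search, 10% checkout.
    mix = {"browse": 0.6, "search": 0.3, "checkout": 0.1}
    plan = assign_scenarios(1000, mix)
    print(Counter(plan))
```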

4) Configure the Test Environment

Prepare the testing environment before execution, and arrange the tools and other resources you need.

5) Implement Test Design

Create the performance tests according to your test design.

6) Run the Tests

Execute and monitor the tests.

7) Analyze, Tune and Retest

After every performance test, analyze the findings, fine-tune, and test again to see whether performance has increased or decreased. Run the tests again using the same or different parameters.
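Comparing each retest with a baseline shows whether a change improved or degraded performance. The hypothetical `compare_runs` below is a small sketch of that comparison. It assumes both runs are summarised by their average response time, as described under the metrics below. The 5% noise tolerance is an arbitrary placeholder.

```python
def compare_runs(baseline_avg_s: float, retest_avg_s: float,
                 tolerance: float = 0.05) -> str:
    """Classify a retest against a baseline by relative change in average response time.

    Changes smaller than `tolerance` (5% by default) are treated as noise.
    """
    if baseline_avg_s <= 0:
        raise ValueError("baseline average must be positive")
    change = (retest_avg_s - baseline_avg_s) / baseline_avg_s
    if change <= -tolerance:
        return f"improved by {-change:.1%}"
    if change >= tolerance:
        return f"degraded by {change:.1%}"
    return "no significant change"


if __name__ == "__main__":
    print(compare_runs(2.7, 2.2))  # faster retest after tuning
```

Running the same comparison with the same parameters several times helps separate real changes from run-to-run noise.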

Performance Testing Metrics

Metrics are needed to understand the quality and effectiveness of performance testing. Improvements cannot be made unless there are measurements.

Two terms need to be defined:

Measurements: the data being collected, such as the number of seconds it takes to respond to a request.

Metrics: calculations that use measurements to define the quality of results, such as average response time (total response time / requests).

Commonly monitored performance metrics include:

- Processor Usage – the amount of time the processor spends executing non-idle threads.

- Memory use – the amount of physical memory available to processes on a computer.

- Disk time – the amount of time the disk is busy executing a read or write request.

- Bandwidth – shows the bits per second used by a network interface.

- Private bytes – the number of bytes a process has allocated that cannot be shared amongst other processes. These are used to measure memory leaks and usage.

- Committed memory – the amount of virtual memory used.

- Memory pages/second – number of pages written to or read from the disk to resolve hard page faults. Hard page faults are when code not from the current working set is called up from elsewhere and retrieved from a disk.

- Page faults/second – the overall rate at which fault pages are processed by the processor. This again occurs when a process requires code from outside its working set.

- CPU interrupts per second – the average number of hardware interrupts a processor receives and processes each second.

- Network bytes total per second – the rate at which bytes are sent and received on the interface including framing characters.

- Response time – the time from when a user enters a request until the first character of the response is received.

- Throughput – the rate at which a computer or network receives requests, measured in requests per second.

- Maximum active sessions – the maximum number of sessions that can be active at once.

- Hit ratios – the proportion of SQL statements that are handled by cached data instead of expensive I/O operations. This is a good place to start when solving bottleneck issues.

- Hits per second – the number of hits on a web server during each second of a load test.

- Database locks – the locking of tables and databases needs to be monitored and carefully tuned.

- Top waits – monitored to determine which wait times can be cut down to speed up data retrieval from memory.

- Thread counts – An application's health can be measured by the number of threads that are running and currently active.
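Several of these metrics can be derived directly from raw measurements. The sketch below, built around a hypothetical `compute_metrics` helper, computes the average response time exactly as defined above (total response time / requests), along with the maximum response time and throughput. It assumes throughput is measured as completed requests divided by the run's wall-clock duration. The sample numbers are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Metrics:
    requests: int
    avg_response_s: float
    max_response_s: float
    throughput_rps: float


def compute_metrics(response_times_s: List[float], duration_s: float) -> Metrics:
    """Turn raw measurements (one response time per request) into metrics."""
    if not response_times_s or duration_s <= 0:
        raise ValueError("need at least one measurement and a positive duration")
    total = sum(response_times_s)
    count = len(response_times_s)
    return Metrics(
        requests=count,
        avg_response_s=total / count,       # total response time / requests
        max_response_s=max(response_times_s),
        throughput_rps=count / duration_s,  # requests per second over the run
    )


if __name__ == "__main__":
    # Made-up measurements from a 2-second run.
    print(compute_metrics([0.8, 1.2, 1.0, 2.7], duration_s=2.0))
```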

Performance Test Tools

Many performance testing tools are available on the market; some are commercial, and some are open source.

Commercial tools: LoadRunner, WebLOAD, NeoLoad

Open-source tool: JMeter (I would recommend JMeter because it is an open-source performance testing tool and a Java platform application.)

Best Tips for Performance Testing

- Test as early as possible in the development process.

- Do not wait and rush performance testing!

- Performance testing is not just for completed projects. There is value in testing individual units or modules.

- Conduct multiple performance tests to ensure consistent findings and determine metric averages.

- Applications often involve multiple systems such as databases, servers, and services. Test the individual units separately as well as together.

- Involve developers, IT, and testers in creating a performance-testing environment.

- Remember that real people will use the software that is undergoing performance testing. Determine how the results will affect users, not just test environment servers.
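For individual units or modules, Python's standard `timeit` module gives a repeatable timing. Repeating the measurement several times follows the tip about running multiple tests and averaging the results. Both `benchmark` and `function_under_test` below are hypothetical; the latter is a placeholder for one of your own units.

```python
import statistics
import timeit
from typing import Callable, Dict


def benchmark(func: Callable[[], object], repeat: int = 5, number: int = 1000) -> Dict[str, float]:
    """Time `number` calls of func, `repeat` times, and report per-call seconds."""
    runs = timeit.repeat(func, repeat=repeat, number=number)
    per_call = [total / number for total in runs]
    return {
        "mean_s": statistics.mean(per_call),
        "min_s": min(per_call),
        "stdev_s": statistics.stdev(per_call) if repeat > 1 else 0.0,
    }


def function_under_test() -> int:
    # Hypothetical unit: replace with the code you want to measure.
    return sum(range(1000))


if __name__ == "__main__":
    print(benchmark(function_under_test))
```

A large standard deviation relative to the mean is a sign that the environment is noisy and that more repetitions are needed before drawing conclusions.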

Five Common Performance Testing Mistakes

Some mistakes can lead to less-than-reliable results during performance testing:

- Not enough time for testing.

- Not involving developers.

- Not using a QA system that resembles the production system.

- Not sufficiently tuning software.

- Having no plan for troubleshooting.

Performance Testing Example

Let us take an example in which we evaluate the behavior of an application whose desired load is less than or equal to 1000 users.


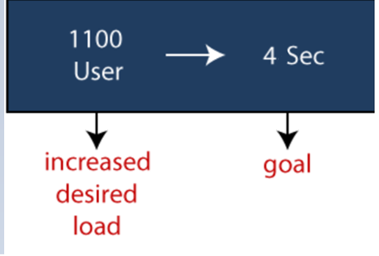

In the image above, we can see that the number of users is increased continuously, in steps of 100, to check the maximum load. This is also called upward scalability testing.

- Scenario 1:

Our Goal: 1000 Users, 3 Seconds

With 1000 users as the desired load, the measured response time is 2.7 seconds. This scenario passes the load test because the response time is under our 3-second goal.

- Scenario 2:

Our Goal: 1100 Users

In the next scenario, we increase the load by 100 users, and the response time goes up to 3.5 seconds. This scenario still passes, because we are now performing stress testing with more than 1000 users: the actual load (1100) is greater than the desired load (1000). No strict response-time goal is defined; the point is simply to ensure that the system keeps working.

- Scenario 3:

In this scenario, we increase the load three more times:

1200 → 3.5sec: [the response time is greater than 3 seconds, but it is acceptable as the load is above the desired load]

1300 → 4sec: [the response time is greater than 3 seconds, but it is acceptable as the load is above the desired load]

1400 → Crashed [the system crashed as the load is much higher than the desired load.]

When we ran this test with 1000 users, we got responses back in 2.7 seconds. When we sent 1100, 1200, and 1300 users to the system, the response times were longer than 3 seconds: the system kept working, but more slowly. When we tested with 1400 users, however, the system could not process the requests and stopped working.
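The pass/fail reasoning in these scenarios can be written down as a small classifier. The `classify` function below is a hypothetical sketch. It assumes a run is recorded as a user count plus a response time, or `None` when the system crashed. The thresholds are this example's 1000 users and 3 seconds, and the response times are the ones from the scenarios above.

```python
from typing import Dict, Optional


def classify(users: int, response_s: Optional[float],
             desired_users: int = 1000, goal_s: float = 3.0) -> str:
    """Apply the example's rules to one run."""
    if response_s is None:
        return "crashed"
    if users <= desired_users:
        # Load test: the response-time goal must be met.
        return "pass" if response_s <= goal_s else "fail"
    # Stress test: above the desired load there is no strict goal;
    # the system only has to keep working.
    return "pass (slower, above desired load)" if response_s > goal_s else "pass"


if __name__ == "__main__":
    results: Dict[int, Optional[float]] = {
        1000: 2.7, 1100: 3.5, 1200: 3.5, 1300: 4.0, 1400: None,
    }
    for users, response in results.items():
        print(users, classify(users, response))
```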

Hope you learned something new!